Northstar AI

"YOU SET YOUR NORTHSTAR; WE HELP YOU GET THERE"

IT’S TIME TO ALIGN YOUR RESOURCES TO ACHIEVE YOUR BUSINESS GOALS

THE CHALLENGE

HOW DO YOU GET A GROUP WORKING TOWARD THE SAME ORGANIZATIONAL GOAL?

CULTURE

As our organizations grow larger and the distances between people increase, the task gets more difficult.

OUR SOLUTION

What if you had access to an army of 300 million people to help?

AI on Call: NORTHSTAR AI

Northstar AI is an AI agent modeled on George C. Marshall’s WWII Victory Plan and his Marshall Plan.

In effect, Marshall's 1942 plan engineered a 300-million-person human reinforcement learning model that worked first to win a world war and then to rebuild the world afterward.

George Marshall's "Victory Plan"

Human neural network with emergent hive decision logic

Fed by battlefield intelligence and supply status

Acceptance testing by battle results

Back-channel relearning toward the Northstar: Defeat Germany; hold Japan

300 million individuals in trust: alignment-goal feedback

300-MILLION-PERSON LARGE-SCALE HUMAN REINFORCEMENT LEARNING MODEL

"COMPARED TO WAR, ALL OTHER FORMS OF HUMAN ENDEAVOR SHRINK TO INSIGNIFICANCE"

PATTON

Northstar AI

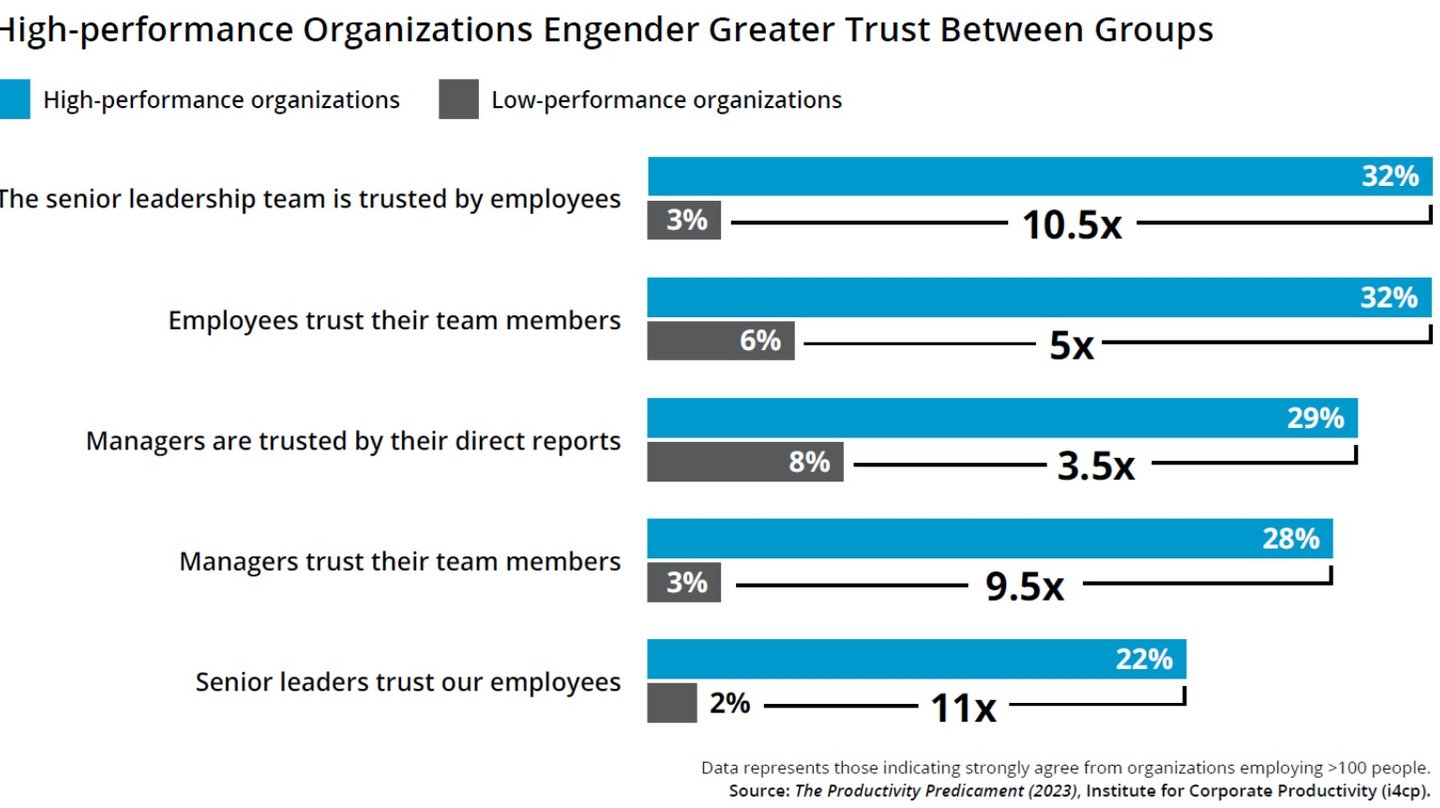

Trust <> Alignment

Trust is an invisible belief, but alignment is an action that can be measured, tested, and resource-managed.

Debit <> Credit

Third-party audit trail; accountability

Back channel to the Northstar goal: resources aligned to action

Accept <> Reject

Binomial Acceptance Testing
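Binomial acceptance testing is a standard quality-assurance technique: sample n events, count how many are rejected, and accept the lot only if the count is at most c. The sketch below is illustrative only and assumes that each event is scored independently as accept or reject with a fixed underlying rejection rate p. The plan values (n = 20, c = 2) and the function names are hypothetical and do not describe Northstar AI's actual implementation.

```python
from math import comb


def acceptance_probability(n: int, c: int, p: float) -> float:
    """Probability that a single sampling plan (n, c) accepts a lot whose
    true per-event rejection rate is p, i.e. P(X <= c) for X ~ Binomial(n, p)."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be in [0, 1]")
    if not 0 <= c <= n:
        raise ValueError("c must satisfy 0 <= c <= n")
    # Sum the binomial probabilities of observing 0..c rejections.
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(c + 1))


def accept(rejections: int, c: int) -> bool:
    """Accept <> Reject decision: accept if observed rejections do not exceed c."""
    return rejections <= c


# Hypothetical plan: review 20 events, accept if at most 2 are misaligned.
if __name__ == "__main__":
    n, c = 20, 2
    for p in (0.05, 0.10, 0.20):
        print(f"p={p:.2f}  P(accept)={acceptance_probability(n, c, p):.3f}")
```

Reading the printed values across p traces the plan's operating characteristic: the probability of acceptance falls as the true rejection rate rises, which is what lets a simple Accept <> Reject count act as a measurable test of alignment.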

Storytelling for each event to communicate and build a culture of trust.

DATA CLEAN ROOMS EXTRACT REAL-WORLD TRUST DATA

AI TRAINING DATA IS THE NEW OIL

AI SYSTEMS OPTIMIZE FOR THE SHORT TERM AT THE EXPENSE OF SUSTAINABILITY

FORMATION OF BETTER HUMAN SIMULATION LAYERS FOR MULTI-AGENT PLATFORMS

TRUST/ALIGNMENT IS OMNI-DOMAIN: HEALTH, FINANCIAL, SUPPLY-CHAIN

PROJECTED MARKET VALUE FOR AI SAFETY

ALIGNMENT AND TRUST DATA: SYSTEMS OF RECORD

AI SAFETY DATA FOR EXISTENTIAL THREAT MITIGATION: RISK HIGH/INVESTMENT LOW

"Before we can trust AI, we must trust ourselves."

Why this worked: individual goals were tethered and aligned

An active feedback loop met dynamic uncertainties

Each small job innovated to adapt to a chaotic landscape

System of shared goals = Trust

AI Reinforcement Learning Model

Human Reinforcement Learning Model

Exchange: feedback alignment loop (dual kaizen)

When AI goals align with human goals = trust and safety

Storytelling and Scores

Collect Trust pixel data for AI deep learning

PRODUCT VISION: AGENT WITHIN EXISTING PLATFORMS

• Amazon SageMaker

• VMware

• SAP SuccessFactors

• Alignment tool for critical mergers and acquisitions

THE CUSTOMER: SO WHAT?

FOLLOW US ON INSTAGRAM

Phil Napala – Founder & CEO

Falls Church, VA

Logistics & Mission Assurance Expertise:

Navy Intern Logistics Program, Mechanicsburg, PA

Naval Air Systems Command, Arlington, VA

Army Materiel Command, Alexandria, VA

Office of the Secretary of Defense, Production and Logistics

NASA Headquarters, Safety Mission Assurance Quality

Notable Achievements:

Lead Auditor, HQ NASA Safety Mission Assurance Quality Review Team (3 years)

Projects on Moon, Mars, and Asteroid Eros

Work displayed: M2-F3 lifting body, Smithsonian Air and Space Museum

Visual artist exhibited alongside Warhol, Rockwell, Dalí

"Before we can begin to trust AI, we must trust ourselves."